Regularization in Machine Learning: Meaning

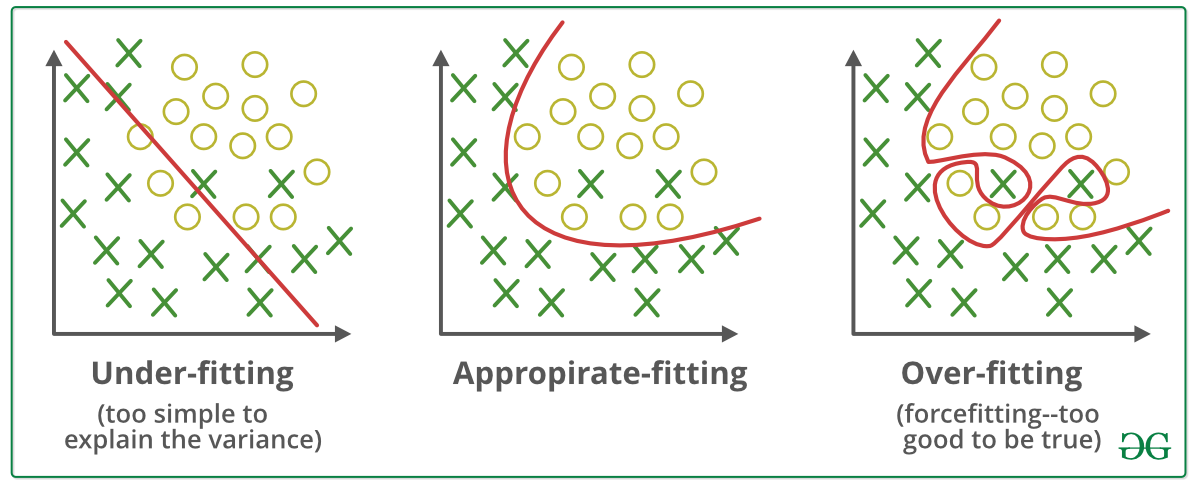

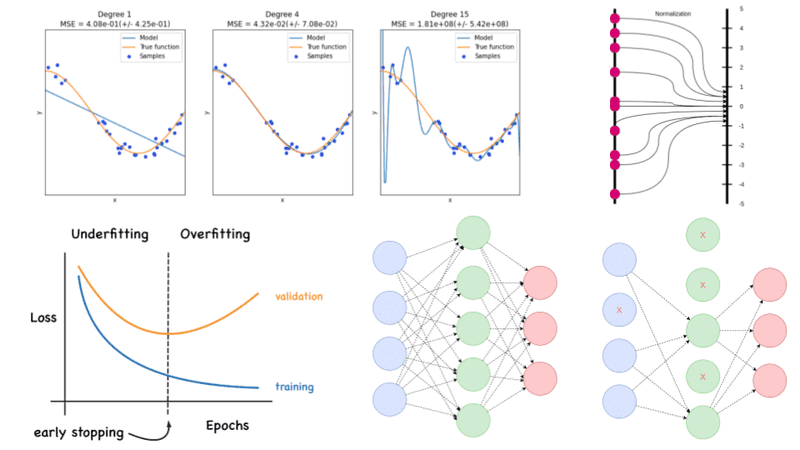

What is regularization in machine learning? Regularization is a technique for reducing a model's error by fitting a function appropriately to the given training set, so that the model avoids overfitting without being pushed into underfitting.

Regularization In Machine Learning Simplilearn

Regularization is one of the techniques used to control overfitting in highly flexible models.

To avoid overfitting, we use regularization to fit a model properly. Regularization is an important concept in machine learning because it addresses the overfitting problem.

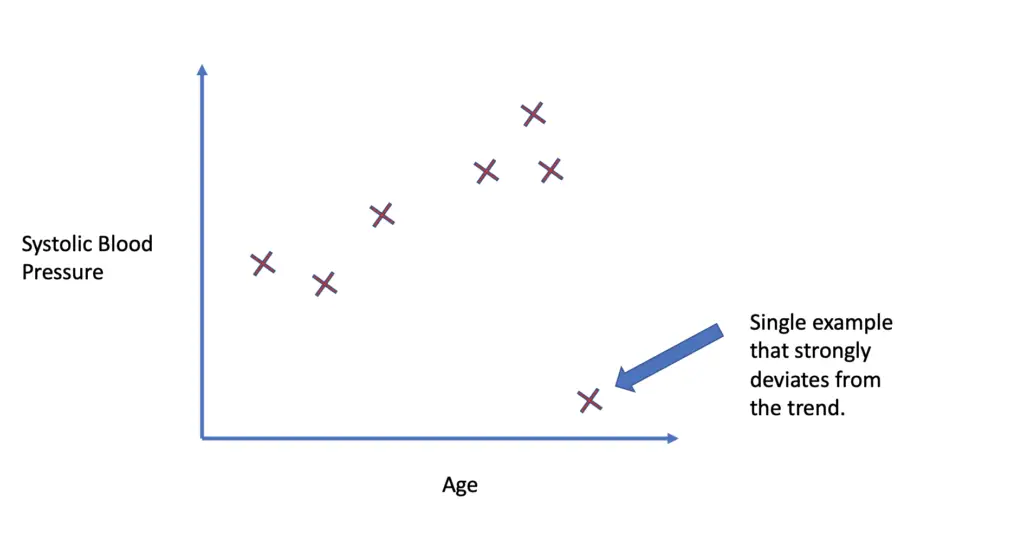

Both overfitting and underfitting ultimately cause poor predictions on new data. Overfitting occurs when a model fits the training set so closely that it performs poorly on unseen data. With L1 (lasso) regularization, some coefficient values are reduced all the way to zero.
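
The way an L1 penalty drives coefficients to exactly zero can be sketched with a small coordinate descent solver. This is a minimal illustration rather than a production implementation: the function names, the synthetic data, and the value `lam=0.5` are all assumptions made for the example, and no intercept is fitted.

```python
import numpy as np

def soft_threshold(value, threshold):
    """Shrink value towards zero by threshold, clipping at exactly zero."""
    return np.sign(value) * max(abs(value) - threshold, 0.0)

def lasso_coordinate_descent(X, y, lam, n_iters=200):
    """Minimise (1/(2n)) * ||y - X w||^2 + lam * ||w||_1 by cyclic coordinate descent."""
    n_samples, n_features = X.shape
    w = np.zeros(n_features)
    col_sq = (X ** 2).sum(axis=0) / n_samples
    for _ in range(n_iters):
        for j in range(n_features):
            # Residual with feature j's current contribution removed.
            residual = y - X @ w + X[:, j] * w[j]
            rho = X[:, j] @ residual / n_samples
            w[j] = soft_threshold(rho, lam) / col_sq[j]
    return w

# Synthetic data (assumed for illustration): only the first two features matter.
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 5))
true_w = np.array([3.0, -2.0, 0.0, 0.0, 0.0])
y = X @ true_w + 0.1 * rng.normal(size=200)

w_lasso = lasso_coordinate_descent(X, y, lam=0.5)
print(w_lasso)
```

The soft-thresholding step is what produces exact zeros: any coefficient whose correlation with the residual falls below `lam` is set to zero rather than merely made small.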

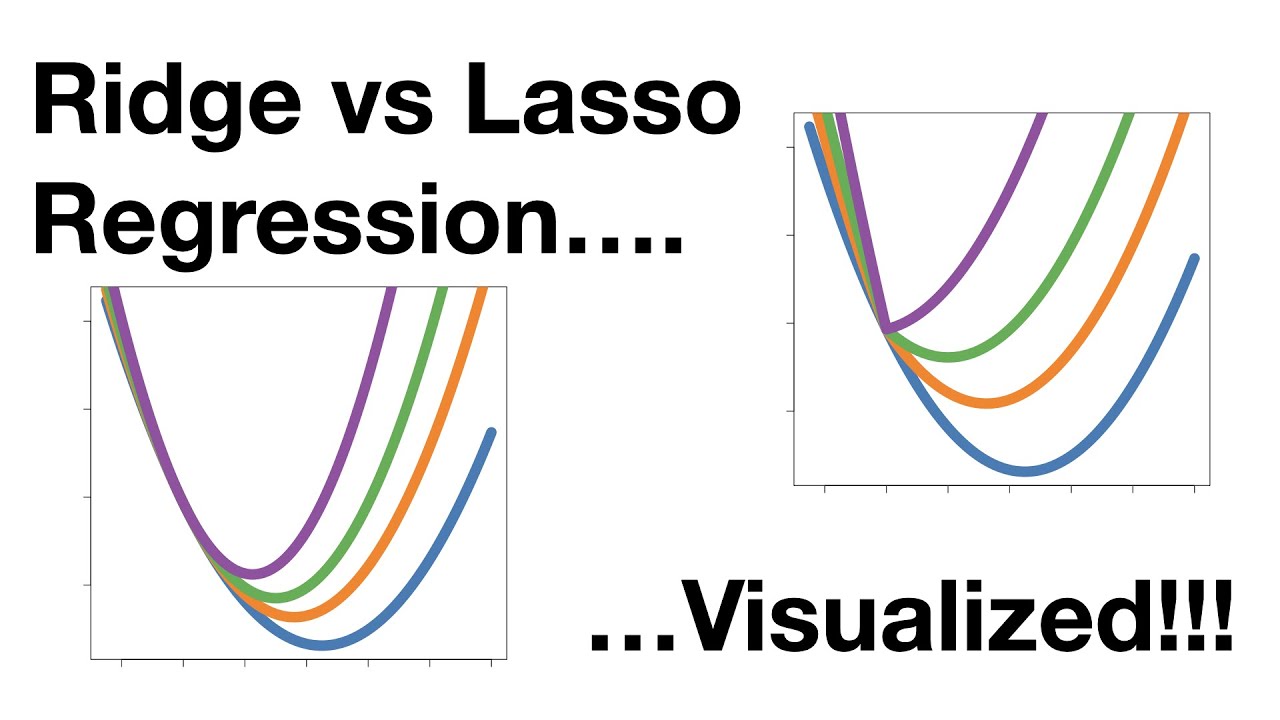

Regularized regression is a form of regression that constrains, regularizes, or shrinks the coefficient estimates towards zero. Regularization helps us build a model that does not lean too heavily on the quirks of the training data. In the context of machine learning, then, regularization is the process of shrinking the coefficients towards zero.
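
The shrinking effect can be seen with ridge (L2) regression, whose solution has a closed form. The sketch below is illustrative only: `ridge_fit`, the synthetic data, and the list of penalty strengths are assumptions, and the data is treated as already centred so no intercept is fitted.

```python
import numpy as np

def ridge_fit(X, y, lam):
    """Closed-form ridge solution: w = (X^T X + lam * I)^(-1) X^T y."""
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + lam * np.eye(n_features), X.T @ y)

# Synthetic data, assumed for illustration.
rng = np.random.default_rng(0)
X = rng.normal(size=(50, 4))
y = X @ np.array([2.0, -1.0, 0.5, 0.0]) + 0.5 * rng.normal(size=50)

norms = []
for lam in [0.0, 1.0, 10.0, 100.0]:
    w = ridge_fit(X, y, lam)
    norms.append(np.linalg.norm(w))
    print(f"lambda={lam:6.1f}  ||w||={norms[-1]:.3f}  w={np.round(w, 3)}")
```

As the penalty strength grows, the norm of the coefficient vector falls, but the individual coefficients generally stay non-zero.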

Regularization is often introduced through regression, but it is not limited to regression. Dropout, for example, is one of the most effective regularization techniques to have emerged in the last few years.
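
Dropout can be sketched in a few lines. The version below is the common "inverted dropout" formulation, which is an assumption about which variant is meant; the function name and parameters are hypothetical and not taken from any particular library.

```python
import numpy as np

def dropout(activations, drop_prob, rng, training=True):
    """Inverted dropout: zero units with probability drop_prob and rescale the rest."""
    if not training or drop_prob == 0.0:
        return activations
    keep_prob = 1.0 - drop_prob
    mask = rng.random(activations.shape) < keep_prob
    # Dividing by keep_prob keeps the expected activation unchanged.
    return activations * mask / keep_prob

rng = np.random.default_rng(0)
hidden = np.ones((4, 1000))

dropped = dropout(hidden, drop_prob=0.5, rng=rng)
unchanged = dropout(hidden, drop_prob=0.5, rng=rng, training=False)
print(dropped.mean(), unchanged.mean())
```

During training a random subset of units is silenced on every call, so the network cannot rely on any single unit. At inference time the layer is a no-op.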

Regularization is any supplementary technique that aims to make the model generalize better, i.e. produce better results on the test set. In other words, it discourages learning a more complex or flexible model, so as to avoid the risk of overfitting.

To understand regularization and its link with machine learning, we first need to understand why we need it. Regularization is a strategy that prevents overfitting by giving the learning algorithm additional information, typically in the form of a constraint or penalty. It is possible to avoid overfitting in an existing model by adding a penalty term to the loss function.
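
One common way to write such a penalty is shown below. The symbols are assumptions introduced for illustration: $L(\beta)$ is the unregularized training loss, $\Omega(\beta)$ is the penalty, and $\lambda \ge 0$ controls its strength.

$$
J(\beta) = L(\beta) + \lambda\,\Omega(\beta),
\qquad
\Omega(\beta) =
\begin{cases}
\sum_{j=1}^{p} \beta_j^{2} & \text{(L2, ridge)} \\
\sum_{j=1}^{p} \lvert \beta_j \rvert & \text{(L1, lasso)}
\end{cases}
$$

Setting $\lambda = 0$ recovers the original loss, while larger values of $\lambda$ push the coefficients harder towards zero.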

This is exactly what we use it for: it is a technique to prevent the model from overfitting, so that it produces better results on the test set.

Regularization reduces a model's error by avoiding overfitting, and it is used to address the overfitting problem in machine learning models. While training a model, it is easy to end up with one that is overfitted or underfitted.

Regularization is an important theme in machine learning: it is a method for balancing overfitting against underfitting during training.

Definition: regularization is the method used to reduce error by fitting a function appropriately on the given training set while avoiding overfitting of the model. To regularize means to make things regular or acceptable. This might at first seem...

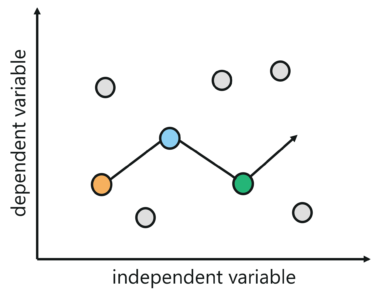

A simple linear regression relates the response to a weighted sum of the predictors, with one coefficient per predictor. Overfitting is a phenomenon that occurs when a machine learning model is constrained to the training set and is not able to perform well on unseen data. In other terms, regularization means discouraging the learning of a more complex or more flexible model.
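
The linear relation mentioned above can be written as follows. The notation is an assumption made for illustration: $Y$ is the response, $X_1, \dots, X_p$ are the predictors, and $\beta_0, \dots, \beta_p$ are the coefficient estimates.

$$
Y \approx \beta_0 + \beta_1 X_1 + \beta_2 X_2 + \dots + \beta_p X_p
$$

Ordinary least squares chooses the coefficients that minimize the residual sum of squares over the $n$ training examples:

$$
\mathrm{RSS} = \sum_{i=1}^{n} \Bigl( y_i - \beta_0 - \sum_{j=1}^{p} \beta_j x_{ij} \Bigr)^{2}
$$

A model overfits when it drives this quantity very low on the training set by fitting noise, which is what a regularization penalty is meant to discourage.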

Regularization refers to modifications made to a learning algorithm that are intended to reduce its generalization error but not its training error. Understanding regularization is essential to training a good model, which makes it one of the most important concepts in machine learning.
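
The difference between training error and generalization error can be measured by holding out data. The sketch below fits a degree-9 polynomial with increasing ridge penalties and reports both errors. The target function, noise level, degree, and penalty values are all assumptions chosen for illustration, and for simplicity the constant column is penalised along with the others.

```python
import numpy as np

def poly_features(x, degree):
    """Columns 1, x, x^2, ..., x^degree."""
    return np.vander(x, degree + 1, increasing=True)

def ridge_fit(X, y, lam):
    """Closed-form ridge solution; this sketch penalises the constant column too."""
    return np.linalg.solve(X.T @ X + lam * np.eye(X.shape[1]), X.T @ y)

def mse(X, y, w):
    """Mean squared error of the linear model w on (X, y)."""
    return float(np.mean((X @ w - y) ** 2))

def target(x):
    """Hypothetical ground-truth function used to generate the data."""
    return np.sin(3 * x)

rng = np.random.default_rng(0)
x_train = rng.uniform(-1, 1, size=15)
x_test = rng.uniform(-1, 1, size=200)
y_train = target(x_train) + 0.2 * rng.normal(size=x_train.size)
y_test = target(x_test) + 0.2 * rng.normal(size=x_test.size)

X_train = poly_features(x_train, degree=9)
X_test = poly_features(x_test, degree=9)

lams = [1e-8, 1e-3, 1e-1]
results = {}
for lam in lams:
    w = ridge_fit(X_train, y_train, lam)
    results[lam] = (mse(X_train, y_train, w), mse(X_test, y_test, w))
    print(f"lambda={lam:g}  train MSE={results[lam][0]:.4f}  test MSE={results[lam][1]:.4f}")
```

The training error can only go up as the penalty grows. Whether the test error improves depends on the data and on the penalty strength, which is why it has to be measured on points the model did not see.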

In machine learning, regularization is a procedure that shrinks the coefficients towards zero. There are different ways to go about it, one of which starts from measuring a loss function. Regularization techniques prevent machine learning algorithms from overfitting.
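
One such approach, sketched here under stated assumptions, is to measure a loss function, add an L2 penalty to it, and minimise the sum with gradient descent. The function names, learning rate, step count, and synthetic data are hypothetical choices for the example.

```python
import numpy as np

def penalised_loss(w, X, y, lam):
    """Mean squared error plus an L2 penalty on the coefficients."""
    residual = X @ w - y
    return float(np.mean(residual ** 2) + lam * np.sum(w ** 2))

def gradient_descent(X, y, lam, lr=0.05, n_steps=2000):
    """Minimise penalised_loss with plain batch gradient descent."""
    n_samples, n_features = X.shape
    w = np.zeros(n_features)
    for _ in range(n_steps):
        # Gradient of the MSE term plus gradient of the L2 penalty.
        grad = (2.0 / n_samples) * (X.T @ (X @ w - y)) + 2.0 * lam * w
        w -= lr * grad
    return w

# Synthetic data, assumed for illustration.
rng = np.random.default_rng(0)
X = rng.normal(size=(100, 3))
y = X @ np.array([1.5, -2.0, 0.5]) + 0.1 * rng.normal(size=100)

w_plain = gradient_descent(X, y, lam=0.0)
w_reg = gradient_descent(X, y, lam=1.0)
print("no penalty :", np.round(w_plain, 3))
print("L2 penalty :", np.round(w_reg, 3))
```

The penalty adds a term proportional to `w` to every gradient step, which is why this form of regularization is often called weight decay.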

Regularization techniques reduce error by fitting a function appropriately to the training set. The word regularize means to make things regular or acceptable. In layman's terms, regularization reduces the magnitude of the coefficients on the independent variables while keeping the same number of variables.

What Is Regularization In Machine Learning Techniques Methods

Tf Example Machine Learning Data Science Glossary Machine Learning Machine Learning Methods Data Science

L2 Vs L1 Regularization In Machine Learning Ridge And Lasso Regularization

Regularization In Machine Learning Programmathically

Learning Patterns Design Patterns For Deep Learning Architectures Deep Learning Learning Pattern Design

Implementation Of Gradient Descent In Linear Regression Linear Regression Regression Data Science

Regularization Techniques For Training Deep Neural Networks Ai Summer

Machine Learning For Humans Part 5 Reinforcement Learning Machine Learning Q Learning Learning

Regularization Of Neural Networks Can Alleviate Overfitting In The Training Phase Current Regularization Methods Such As Dropou Networking Connection Dropout

What Is Regularization In Machine Learning

Machine Learning Fundamentals Bias And Variance Youtube

Difference Between Bagging And Random Forest Machine Learning Learning Problems Supervised Machine Learning

Regularization In Machine Learning Regularization In Java Edureka

A Simple Explanation Of Regularization In Machine Learning Nintyzeros
